Late last year, an international team of researchers used the world's most powerful supercomputer—Summit—to process simulated observations of the early Universe. A mighty 400 gigabytes of data were processed every single second, equivalent to streaming more than 1,600 hours of standard definition YouTube videos a second.

At the time, Professor Andreas Wicenec, director of data intensive astronomy at the International Centre for Radio Astronomy Research, said this was the first time astronomy data had been processed on this scale. Why? To check that the world's biggest radio telescope, the Square Kilometre Array (SKA), will, once built, be able to handle the deluge of astronomical data it will generate.

"We've learned a lot of lessons from the trial, including how to optimize data transfer," says Wicenec. "And completing this has told us that we can deal with the data from SKA when it comes online in the next decade. But the fact that we have needed the world's biggest supercomputer to run this test shows our needs exist at the very edge of what today's supercomputers can deliver."

The Summit supercomputer at Oak Ridge National Laboratory (ORNL). Credit: ORNL

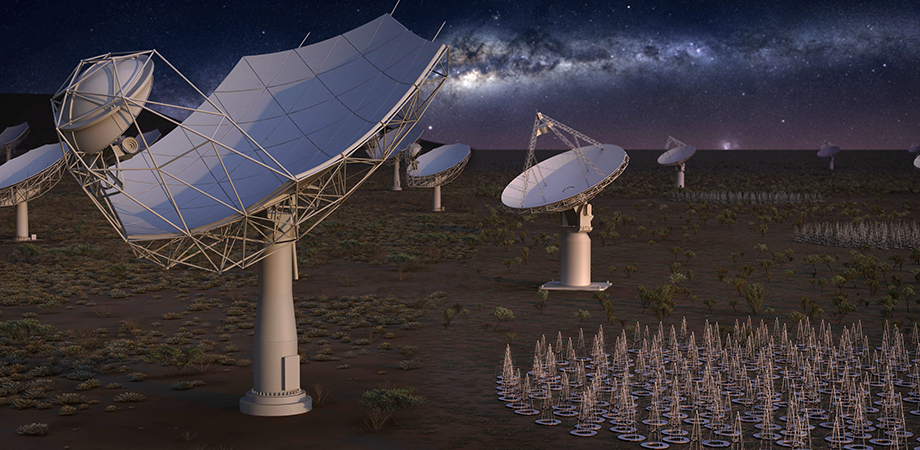

Without a doubt, SKA is set to be radio astronomy on steroids. The entire telescope will be built in two stages, with the first phase including 197 radio dishes in South Africa and some 130,000 low-frequency antennas in Western Australia. Then, the second phase will include up to one million low-frequency antennas in Australia and some 2,000 radio dishes across all of Africa. Once complete, SKA will be able to survey the sky 10,000 times faster than any existing radio telescope array.

Not surprisingly, SKA is stretching the principle of radio interferometry to the limit. Once connected via fiber-optic networks to work as a single virtual telescope, its many dishes and antennas will have a vast collecting area—yes, one square kilometre—that will enable SKA to probe the Universe in unprecedented detail.

Importantly, the array also has a massive frequency range of 50 MHz to 14 GHz, which will allow it to tackle a remarkably broad range of science. The low-frequency antennas in Australia, known as the ‘SKA-Low’ array, will cover frequencies from 50 MHz to 350 MHz. Meanwhile, the dishes across Africa, called the ‘SKA-Mid’ array, will cover 350 MHz to 14 GHz.

"[This large frequency range] will enable physics exploration spanning the Epoch of Reionization, when the first luminous structures were born, as well as gravity and general relativity, through pulsars at the higher frequencies," highlights Joe McMullin, deputy director general and program director of the SKA Organisation, and based at the Jodrell Bank Discovery Centre, United Kingdom.

Critically, each site is already home to ‘precursor’ telescopes that have fed into SKA's final design. For example, a 64-dish radio telescope array called MeerKAT, in South Africa's Karoo region, will carry out ground-breaking science just ahead of SKA. At the same time, the Murchison Widefield Array, with its 256 low-frequency antenna tiles, and the Australian Square Kilometre Array Pathfinder (ASKAP), comprising 36 parabolic antennas, are acting as precursors to SKA-Low in Australia.

The Australian SKA Pathfinder (ASKAP) is one of SKA's precursor telescopes and is operated by CSIRO. Credit: CSIRO

"Many of SKA's new capabilities are actually extrapolations of existing technologies," says McMullin. "For example, SKA will use the lightweight, stiff dishes we see at MeerKAT, but these will be larger and have a more precise pointing area to give you performance at a higher frequency band. The path to SKA has very much relied on precursor instruments like this."

But why radio astronomy? To study the Universe, astronomers use the entire electromagnetic spectrum, because different wavelengths of light often reveal different astronomical objects. Optical astronomy is great for studying stars and galaxies that emit a lot of visible light; infrared telescopes may be used to study cold dust and relatively cool stars; while ultraviolet telescopes can identify the hottest, most energetic stars.

But radio astronomy has a couple of features that appeal to astronomers. For starters, radio waves can travel unimpeded by our galaxy's dust, meaning distant galaxies can also be detected. And critically, hydrogen, the most abundant element in the Universe and a key building block of so many extraterrestrial objects, emits radio waves.

As Wicenec points out, "Every part of the electromagnetic spectrum tells us something about the physics that is going on out there, but using radio waves we can actually observe the transition of neutral hydrogen gas through galaxies, which can provide an interesting history of the whole Universe."

Astronomers are also hopeful that with SKA they will eventually be able to answer big questions, such as: How were the first black holes formed? Was Einstein right about gravity? How do cosmic magnets stabilize galaxies? And even, are we alone?

"With SKA we'll be able to try to understand how rocky planets form, detect the biochemical signatures of particles, and understand the characteristics of exoplanets," says McMullin. "So as well as scanning the stars with such high sensitivity, I think the strength of exploratory instruments, such as SKA, is the breadth of areas we can impact."

While the promise of SKA is nothing short of phenomenal, its magnificent scale makes it the largest big data project in the known Universe. The SKA-Low array will generate 5 zettabytes (5 × 10⁶ petabytes) of data every year, an unimaginable volume considering that global Internet traffic only passed 1 zettabyte for the first time in 2016. Meanwhile, SKA-Mid is expected to produce 62 exabytes of data each year (62 × 10³ petabytes), enough to fill 340,000 average laptops every day. SKA project figures also estimate that this data flood will be transmitted from each telescope at a blisteringly fast 8 terabits per second, signalling the need for extreme data processing.
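
As a rough sanity check on these figures, the short Python sketch below converts the quoted annual volumes to petabytes and works out what "340,000 average laptops every day" implies for the capacity of one laptop. It assumes decimal SI prefixes (1 petabyte = 10^15 bytes) and a 365-day year; neither assumption comes from the SKA project figures, and the variable names are purely illustrative.

```python
# Unit sizes in bytes, assuming decimal (SI) prefixes.
PETABYTE = 10**15
EXABYTE = 10**18
ZETTABYTE = 10**21

ska_low_per_year = 5 * ZETTABYTE   # quoted SKA-Low annual volume
ska_mid_per_year = 62 * EXABYTE    # quoted SKA-Mid annual volume

# Express both annual volumes in petabytes.
ska_low_pb = ska_low_per_year // PETABYTE
ska_mid_pb = ska_mid_per_year // PETABYTE

# Laptop capacity implied by "340,000 laptops every day"
# (assumption: a 365-day year).
ska_mid_per_day = ska_mid_per_year / 365
implied_laptop_gb = ska_mid_per_day / 340_000 / 10**9

print(f"SKA-Low: {ska_low_pb:,} PB per year")
print(f"SKA-Mid: {ska_mid_pb:,} PB per year")
print(f"Implied laptop capacity: {implied_laptop_gb:.0f} GB")
```

Under those assumptions, the laptop comparison works out to roughly half a terabyte of storage per machine.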

Artist’s impression showing a wide-field view of how the SKA-Low dipole antennas may look when deployed. Credit: SKA Organisation

To handle the data deluge, real-time data processing will start at so-called central signal processors, located next to each array of antennas and dishes—SKA-Low and SKA-Mid—to minimize the cost of data transfer. Raw telescope data will be sent to these processors via a data transport network.

At the central signal processors, high-speed processors will make sense of the tangle of digitized astronomical signals using two key digital signal processing functions: correlation and beamforming.

Correlation combines the signals from radio telescopes into manageable-sized data packages while ensuring all signals are synchronized so that the antennas operate like a single telescope. SKA's Australia-based antennas have no moving parts, so beamforming will be used to electronically steer these aperture arrays to observe specific regions. Received signals will then be combined to simulate a large directional antenna.
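
To make the two operations concrete, here is a toy Python sketch for four antennas in a line receiving a single-frequency plane wave. It is only an illustration under stated assumptions, not SKA's signal-processing design: the antenna positions, source direction, and function names are all hypothetical, and a real correlator would also split the signal into frequency channels and average over time.

```python
import cmath
import math

C = 299_792_458.0  # speed of light, m/s

def antenna_voltages(positions_m, freq_hz, source_angle_rad):
    """Complex voltage at each antenna of a 1-D array for a unit plane wave
    arriving from source_angle_rad (measured from zenith)."""
    wavelength = C / freq_hz
    return [cmath.exp(2j * math.pi * x * math.sin(source_angle_rad) / wavelength)
            for x in positions_m]

def beamform(voltages, positions_m, freq_hz, steer_angle_rad):
    """Phase-shift-and-sum beamformer: undo the geometric phase expected for
    the steering direction, then average the antenna signals."""
    wavelength = C / freq_hz
    total = 0j
    for v, x in zip(voltages, positions_m):
        weight = cmath.exp(-2j * math.pi * x * math.sin(steer_angle_rad) / wavelength)
        total += weight * v
    return abs(total / len(voltages)) ** 2  # beam power, 1.0 when fully coherent

def correlate(voltages):
    """Correlator: multiply each antenna's signal by the complex conjugate of
    every other antenna's signal, giving one value per antenna pair."""
    n = len(voltages)
    return {(i, j): voltages[i] * voltages[j].conjugate()
            for i in range(n) for j in range(i + 1, n)}

positions = [0.0, 1.5, 3.0, 4.5]   # hypothetical antenna positions, metres
freq = 100e6                        # 100 MHz, inside the SKA-Low band
source = math.radians(20.0)         # hypothetical source direction

v = antenna_voltages(positions, freq, source)
on_source = beamform(v, positions, freq, math.radians(20.0))
off_source = beamform(v, positions, freq, math.radians(-40.0))
visibilities = correlate(v)

print(f"beam power on source:  {on_source:.3f}")
print(f"beam power off source: {off_source:.3f}")
print(f"antenna pairs correlated: {len(visibilities)}")
```

Steering the beam amounts to choosing a different set of phase weights, which is why no moving parts are needed. The correlator output grows with the number of antenna pairs, roughly the square of the number of antennas, which hints at why scaling these functions to SKA is the hard part.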

Correlation and beamforming are hardly new to radio astronomy, but applying them to SKA's massive numbers of antennas is.

Researchers from SKA's Central Signal Processor consortium have spent several years developing and testing the best signal-processing technologies to handle correlation and beamforming at the mighty telescope's arrays. After weighing the processing challenges, manufacturing costs, and on-site power limitations of several technologies, the researchers settled on platforms of field programmable gate arrays (FPGAs) to process massive amounts of data.

Each platform will house several FPGAs, based on either Intel or Xilinx devices, as well as memory modules. Each SKA-Low and SKA-Mid array will require hundreds of these platforms.

The central signal processors will also need to sift through the telescope data to detect signals from astronomical phenomena such as pulsars and transient events, including supernovae and gamma-ray bursts. To this end, researchers have developed novel pulsar search and timing subelements, based on a hybrid architecture of graphics processing units and FPGAs.

"SKA is going to be groundbreaking," says Wicenec. "We've never done anything on this scale before, it's totally novel and we are having to change the way we process data."

Artist's rendition of the SKA-Mid dishes in Africa. The 15-meter-wide dish telescopes will provide the SKA with some of its highest resolution imaging capability, working towards the upper range of radio frequencies which the SKA will cover. Credit: SKA Organisation

After initial signal processing, the telescope data will be carried along fiber-optic networks that span hundreds of kilometers to the science data processor (SDP), which, for astronomers, is where the real fun will begin.

Here, the endless streams of digits will be transformed into data packages and the beginnings of images. These will then be distributed to research centers around the world, ready to form detailed astronomical images of the sky.

This seriously heavyweight processing is set to take place using two supercomputers, supported by millions of CPU processors, which is where Wicenec's Summit supercomputer test run comes in. One supercomputer in Cape Town, South Africa, will process data from SKA-Mid, while another in Perth, Australia, will process data from SKA-Low.

Each computer will be able to deliver in excess of 100 petaFLOPS, which is about the same processing power as around 6 million laptop CPUs. Image processing will take place in several steps, which include removing data corrupted by interference from, say, mobile phones or errors in signal transport, and then calibrating every single antenna's signal to remove the effects of instrumental variation.
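
The two clean-up steps described above can be sketched in a few lines of Python. This is a simplified illustration, not the SDP pipeline: the flagging rule (a threshold on the median absolute deviation) is a common stand-in chosen here for brevity, the per-antenna gains are assumed to be already known rather than solved for, and all function names and sample values are hypothetical.

```python
import cmath
import statistics

def flag_outliers(amplitudes, threshold=5.0):
    """Return True for each sample that looks corrupted: more than
    `threshold` scaled median absolute deviations from the median."""
    median = statistics.median(amplitudes)
    mad = statistics.median([abs(a - median) for a in amplitudes])
    scale = (1.4826 * mad) or 1e-12  # avoid dividing by zero on constant data
    return [abs(a - median) / scale > threshold for a in amplitudes]

def apply_gains(visibility, gain_i, gain_j):
    """Remove instrumental gains from one antenna pair:
    V_true = V_observed / (g_i * conj(g_j))."""
    return visibility / (gain_i * gain_j.conjugate())

# Step 1: flag interference. One sample carries a strong spike.
amplitudes = [1.02, 0.98, 1.01, 0.99, 25.0, 1.00, 1.03, 0.97]
flags = flag_outliers(amplitudes)

# Step 2: calibrate. Corrupt a known value with two antenna gains, then undo them.
true_vis = 2.0 + 1.0j
g1 = 1.1 * cmath.exp(0.3j)    # hypothetical gain of antenna 1
g2 = 0.9 * cmath.exp(-0.2j)   # hypothetical gain of antenna 2
observed = g1 * g2.conjugate() * true_vis
recovered = apply_gains(observed, g1, g2)

print("flags:", flags)
print("recovered visibility:", recovered)
```

In practice the gains are not known in advance and have to be estimated from the data, which is a large part of what makes calibration expensive.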

The final image is derived from this refined data. Unlike your optical telescope, a radio interferometer, such as SKA, cannot obtain an image of the sky directly. So instead, the measurements from its antennas are Fourier transformed to create a representation of the sky brightness distribution of the source. This calculation is repeated over and over again to eventually give a true image of the sky.
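
A one-dimensional toy version of this Fourier step is sketched below in Python. It assumes a single point source and 40 evenly spaced antenna separations measured in wavelengths; the symbols `u` (separation) and `l` (sky direction cosine) and all function names are illustrative choices, not taken from the SKA software. Production pipelines grid the measurements and use fast Fourier transforms rather than the direct sum shown here.

```python
import cmath
import math

def visibility(u, sources):
    """Measurement at antenna separation u (in wavelengths) for point sources
    given as (flux, l) pairs, where l is the sky direction cosine."""
    return sum(flux * cmath.exp(-2j * math.pi * u * l) for flux, l in sources)

def fourier_image(separations, visibilities, l_grid):
    """Direct inverse Fourier sum of the sampled measurements,
    evaluated at each sky position in l_grid."""
    n = len(separations)
    return [sum((v * cmath.exp(2j * math.pi * u * l)).real
                for u, v in zip(separations, visibilities)) / n
            for l in l_grid]

sources = [(1.0, 0.10)]                              # hypothetical source at l = 0.10
separations = [float(u) for u in range(1, 41)]       # 1 to 40 wavelengths
vis = [visibility(u, sources) for u in separations]

l_grid = [(i - 50) / 200 for i in range(101)]        # sky positions from -0.25 to 0.25
image = fourier_image(separations, vis, l_grid)
peak_l = l_grid[image.index(max(image))]

print(f"brightest sky position: l = {peak_l:.3f}")
```

The reconstructed brightness peaks at the position of the input source, surrounded by ripples caused by the finite set of antenna separations; cleaning up those ripples is one reason the real calculation has to be repeated.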

This composite of ALMA and VLT data shows an image of the center of a galaxy named NGC 5643. ALMA data is red, VLT data is shown in blue and orange. Credit: ESO/A. Alonso-Herrero et al.; ALMA (ESO/NAOJ/NRAO)

The scale of data processing here is unprecedented, but necessary. As Wicenec highlights, "The telescopes produce data in up to 64,000 channels at the same time, and we will be generating monochromatic images for every single channel at the same time. Processing this is very challenging."

Netherlands Institute for Radio Astronomy (ASTRON) researcher Chris Broekema agrees. Working with IBM researchers as part of a Dutch-government funded project, DOME, he and colleagues developed algorithms and software that are being used to ease the formidable data processing tasks at SKA's science data processor. For example, they devised a novel algorithm to efficiently transform data into a sky image and also used remote direct memory access to slash the energy required to process SKA's data torrent.

Thanks to such efforts, SKA researchers recently nailed down the final software and algorithms for the SDP, which, combined with Wicenec's latest Summit test run, signals that the future of radio astronomy is getting close.

"Running those tests on the largest supercomputer provides an indication of the scale of the computing challenge here, but these are very nice results," says Broekema. "These [test runs] prove that the software architecture we designed in the science data processor consortium does scale to the processing capacities required by SKA."

Importantly, Broekema is also confident that the astronomy community at large can benefit from SKA's promising big-data-processing results. "Radio astronomy is, in general, a very open science, so ways in which we figured out these problems here can be used by other telescopes, and hopefully vice versa as well," he says.

Construction on SKA is due to start in 2021. But, perhaps predictably for the world of astronomy, questions over funding have raised doubts on the final construction details, leaving some in the community wondering if SKA's ambitions will have to be scaled back.

Still, those closely involved with SKA are adamant that it is business as usual. "We have to be very transparent about what the initial plan is and build a path to the full SKA," says McMullin. "Interferometers are inherently scalable and our ambition is clear: to build exactly the original design without compromise."

Likewise, Wicenec remains excited. "One of the great things about radio astronomy is that you don't have to build the entire telescope array to start observations," he says. "The project has a series of array releases planned, and even after the first one, which will only be a small percent of the installation, we'll be able to start observations and test the system. Scientists are very excited to be finally getting their hands on something like this."

Rebecca Pool is a science and technology writer based in Lincoln, United Kingdom.